I’m so glad that The New York Times ran this op-ed (Artificial Intelligence’s White Guy Problem) about the inherent biases in Artificial Intelligence algorithms. Popular culture and much of the media coverage of AI tend to mystify how it works, neglecting to point out that any machine learning algorithm is only as good as the training set used to create it.

Delip Rao, a machine learning consultant, thinks long and hard about the bias problem. He recently gave a fascinating talk at a machine learning meetup, where he implored a room of machine learning engineers to be vigilant about making sure their algorithms were not encoding hidden biases.

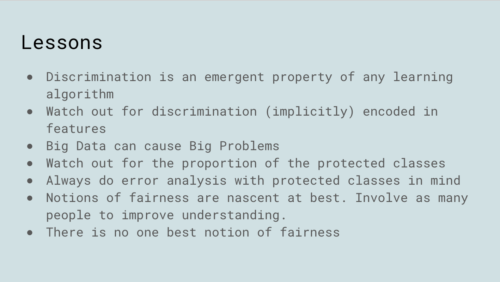

The slides from his talk are posted online, but Delip’s final takeaways have stuck with me, and they are worth keeping in mind whenever you read stories about algorithms taking on a mind of their own.

It is still very early days. Many embarrassing mistakes have already been made, and more will follow. We should assume that every automated system is fallible, and treat each mistake as an opportunity to improve both ourselves and the algorithm rather than as an indictment of the technology.
