same as it everwas

-

239 Play & City Island

I’m always looking for excuses to explore a new neighborhood, so when I read about 239 Play, also known as “Dan’s Parent’s House,” I knew I had to check it out and visit City Island. I took the ferry from 34th Street two stops to Ferry Point Park by Throgs Neck, just a 30 minute…

-

The Joy of Happenstance

John Battelle has a wonderful reminiscence about what’s lost as information moves from analog to digital. In “Digital is Killing Serendipity,” he writes specifically about the college course catalog and what disappears as these guides move online. In the comments, I shared an experience I had with a printed catalog at the Edinburgh Festival…

-

Video: Simon Willison on AI

Simon Willison has been hacking on technology for years and writing about it on his excellent blog, where he explains how to recreate his innovations and lets you follow along on his adventures. He was a speaker at this year’s WordCamp US 2023 conference and gave a talk that I would highly recommend to anyone…

-

Sitemaps for AI

Last week, I was double-booked in conferences. Wednesday & Thursday I was in Philadelphia for the beginning of the Online News Association conference, a gathering of journalists who work with words online. Friday & Saturday, I was in Washington DC for WordCamp, a gathering of people who work with WordPress, the CMS software that powers…

-

Urban Downhill Racing

Insane FPV of Urban Downhill Racer Tomas Slavik and how they did it.

-

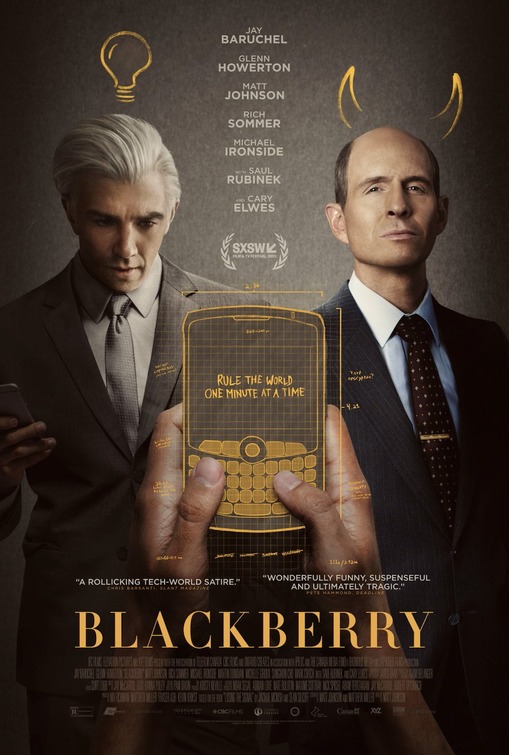

Vampires in Waterloo

There’s a scene in the BlackBerry movie (I rented it off Amazon) where Jim Balsillie, played by “It’s Always Sunny in Philadelphia” star Glenn Howerton, goes off on the NHL board over a decision he does not like. It’s a full-blown meltdown with a bizarre line thrown in there that sticks in the back of…

-

Phish and Guy Forget

The more I learn about this crazy band, the deeper I sink into the mythology. I caught the last two nights of Phish’s seven-day “residency” at Madison Square Garden last week. The usual superlatives apply about how the band is playing better than ever. The guy who does the lights even had a profile piece…

-

MonsterTrack

I fell down a rabbit hole the other night watching cyclists on fixed-gear bikes ride like maniacs through busy urban streets. It all started when I ran across this viral video promoting designer Aimé Leon Dore by putting their Knit Cycling Jersey on the back of a rider navigating the busy streets of Manhattan.…

-

Happy ‘merican Day

Have a Happy (and safe) Fourth of July! In case you’re wondering if everyone made it out OK, including the Honda Odyssey in the driveway, they’re fine, if a little chastened by their unexpected fame. Echoes of the 2012 San Diego fiasco, when an entire evening’s worth of fireworks went off all at once.

Who am I?