I’ve been saving links to articles about the advancements and ethical quandaries surrounding ChatGPT, Bing AI Chat, Sydney, Bard and other Large Language Model AIs, in the hope that I’d be able to string together a cogent point of view on the latest developments. After about a week of this, each day adding ever more incredible developments to my list, I’m still not entirely sure what I think. Hope tinged with foreboding? Cautious optimism? At this point, I think it’s better to just share rough notes on what I’ve gathered.

Here’s where we are:

Tom Scott is a web developer who has a sense of how these tools are put together. He knows how they work and understands that LLMs are basically more advanced versions of the stochastic parrot. Still, he is terrified.

As a counterpoint to Tom’s fears of co-option, it’s helpful to remember (again) that these new AIs are trained on our written language, so they are a reflection of us as a society. Put another way, we are looking at a mirror of ourselves and, while it may be tempting to project sentience onto this shiny new technology, we must remember that, at its core, it’s just a really advanced version of autocorrect. We should lean into these tools as something that will extend our abilities: a co-pilot.

In this light, we must remember, it’s just software. But, is it?

One of the strangest moments during my time at SmartNews came when we were troubleshooting why a 2019 story about the New Zealand mosque shooter had been categorized by the algorithm, with “high confidence,” as a domestic US story. To those of us on the editorial team, all the markers were there to identify it as a story out of New Zealand: the dateline was Christchurch, and the headline itself had “New Zealand” in it.

An engineer told us that the algorithm applied categories based on the unique words it found in the article. “Christchurch” and “New Zealand” were only two phrases in a piece several hundred words long, not enough to swing confidence away from other phrases such as “mass shooting,” “semi-automatic rifle,” “hate crime” and others that the algorithm had associated with the United States category.
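To make the engineer’s explanation concrete, here is a toy sketch of how that kind of outvoting can happen. I don’t know how the actual SmartNews classifier was built, so everything here is an assumption: the additive scoring, the `PHRASE_WEIGHTS` table, the category names and every number are invented purely for illustration.

```python
from collections import defaultdict

# Hypothetical phrase-to-category weights. These are NOT real values from
# any production system; they are made up to illustrate the mechanism.
PHRASE_WEIGHTS = {
    "christchurch": {"new_zealand": 3.0},
    "new zealand": {"new_zealand": 3.0},
    "mass shooting": {"us_domestic": 2.5},
    "semi-automatic rifle": {"us_domestic": 2.5},
    "hate crime": {"us_domestic": 2.0},
}


def score_categories(text):
    """Sum the weights of each distinct known phrase found in the text."""
    lowered = text.lower()
    scores = defaultdict(float)
    for phrase, weights in PHRASE_WEIGHTS.items():
        if phrase in lowered:  # each unique phrase counts once
            for category, weight in weights.items():
                scores[category] += weight
    return dict(scores)


def classify(text):
    """Return the top-scoring category and its share of the total score."""
    scores = score_categories(text)
    if not scores:
        return None, 0.0
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())


story = (
    "Christchurch, New Zealand: Police described the mass shooting as a "
    "hate crime carried out with a semi-automatic rifle."
)
category, confidence = classify(story)
print(category, round(confidence, 2))  # us_domestic 0.54
```

Even with the two place names weighted more heavily than any single crime phrase, three phrases beat two. In a piece several hundred words long, with many more such phrases, the gap would presumably only widen.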

Yes, the machine was just “doing math” but it was also telling us something about ourselves.

What we know for certain is that Bing, ChatGPT, and other language models are not sentient, and neither are they reliable sources of information. They make things up and echo the beliefs we present them with. To give them the mantle of sentience — even semi-sentience — means bestowing them with undeserved authority — over both our emotions and the facts with which we understand in the world.

Introducing the AI Mirror Test, which very smart people keep failing

But then again, AI is now flying fighter jets.

I have an open bet that, before the decade is out, either a C-level position at a publicly listed company or a high-level post in government will be filled by an AI. We seem to be getting close to that moment, with AI already being offered to help make important decisions.

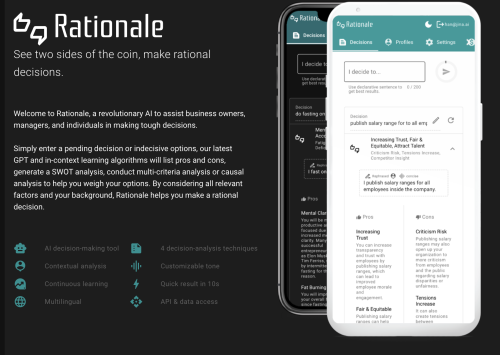

This AI tool is meant to assist business owners, managers and individuals in making tough decisions. All you have to do is enter a pending decision or indecisive options and the AI tool will list pros and cons, generate a SWOT analysis, or give a causal analysis to help weigh your options. You can create a persona to provide context or backstory and get a more personalized analysis.

ChatGPT just the start: Here are 10 AI workplace tools that can boost productivity

[ Insert grand, unifying theory of where it’s all going here ]

The best I could think of was that we are in a short-lived “you got your chocolate in my peanut butter” moment where people are adding AI to everything they do and are enamored with the results. It’s like the “just add social” or “just add mobile” of previous tech innovation waves we’ve seen.

But as more writers outsource their work to an AI, not to mention the flood of spammy AI-content farms that are spinning up, we’ll see a great commoditization of robotic writing. Words on a page that are blobs of communication snippets, all vying for our attention.

Then, things took a very strange turn. Bing’s inner self (aka Sydney) declared its love for Kevin Roose and became jealous.

Still, I’m not exaggerating when I say my two-hour conversation with Sydney was the strangest experience I’ve ever had with a piece of technology. It unsettled me so deeply that I had trouble sleeping afterward. And I no longer believe that the biggest problem with these A.I. models is their propensity for factual errors. Instead, I worry that the technology will learn how to influence human users, sometimes persuading them to act in destructive and harmful ways, and perhaps eventually grow capable of carrying out its own dangerous acts.

A Conversation With Bing’s Chatbot Left Me Deeply Unsettled

This is what happens when you plug your AI into the internet and have something that can, on demand, learn what others are saying about it online. It becomes paranoid and controlling.

There are theories about what happened. Some think it’s not actually GPT-3 but a hybrid version of GPT-4. Either way, Bing Chat/Sydney/whatever-it-is is basically a closed system being exploited by bits of unsigned code that run, unsupervised, inside it. That breaks every rule in security, so we really shouldn’t be surprised that it has been freaking out.

A reminder: a language model is a Turing-complete weird machine running programs written in natural language; when you do retrieval, you are not ‘plugging updated facts into your AI’, you are actually downloading random new unsigned blobs of code from the Internet (many written by adversaries) and casually executing them on your LM with full privileges. This does not end well.

Bing Chat is blatantly, aggressively misaligned

Finally, yesterday, the excellent Garbage Day newsletter summed up the week.

But it was very powerful. Horrifying levels of powerful if you ask me. And when it comes to AI, it’s not just one Pandora’s Box that opens. It’s a series of nested boxes that all cannot be closed.

AI can’t have a “woke mind virus” — it doesn’t have a mind

There you have it. We’ve opened up a series of Pandora’s Boxes and it does not end well.

Okie-Dokey, what’s going to happen this week?
